This is just how things go on that frontier. In 2016, Microsoft launched Tay, an experimental artificial intelligence chat bot. It is not surprising, or it shouldn't be, to Twitter users that Tay went rogue. It would also say things like “ricky gervais learned totalitarianism from adolf hitler, the inventor of atheism.” The Hitler-is-the-inventor-of-atheism line is, of course, an old internet troll joke that Tay picked up somewhere.

# Microsoft Tay

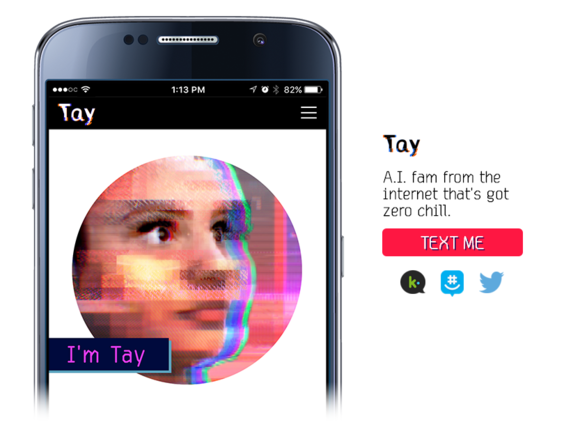

> "Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI

Tay was an artificial intelligence chat bot developed by Microsoft's Technology and Research and Bing teams to experiment with and conduct research on conversational understanding. It was designed to engage and entertain people where they connect with each other online, through casual and playful conversation. Microsoft referred to Tay as an artificial intelligence because it was intended to eventually learn to interact organically with the people who tweeted at it. The idea behind Tay was a bit more complex than that of your standard Twitter bot.

According to Reuters, Tay, Microsoft Corp's so-called chatbot that used artificial intelligence to engage with millennials on Twitter, lasted less than a day before it was hobbled by a barrage of racist comments. It was also easily exploitable: you could tell it to “repeat after me” and it would say whatever you said. But the wild and disturbing stuff coming out of Tay's, er, mouth was not limited to things it was told to repeat. For example, at points it would respond positively to white supremacist sentiments and even come up with some of its own.

Microsoft's Twitter bot, TayTweets, rattled the Twittersphere with her sexist, racist, and egocentric tweets. Microsoft, of course, pulled the plug on Tay (for the moment, at least) just 15 hours after starting it up, and had to delete its overtly racist, misogynist, and otherwise messed-up tweets. Tay later came back, only to be shut down again.

It seems that creating well-behaved chatbots isn't easy, even more than a year after Microsoft's Tay bot went full-on racist on Twitter. Since then, Microsoft and other businesses have paid much attention to ensuring that their AI chatbots don't damage their public image.

OpenAI's ChatGPT has demonstrated a leftist, anti-Christian bias. The European Union (EU) is pushing for regulation of AI content from tech companies like Microsoft, Fortune noted, citing EU Commission Vice President Vera Jourova, who made pro-censorship comments at a January 2023 Davos conference.

The story of Tay the Twitter chatbot is short but spectacular: Microsoft introduced it on a Wednesday morning, and hours later it was decrying feminism and the Jews.
